A recurring theme with computational photography is the tension over creative choices. The “AI” features in cameras and photo editing apps can take over for many technical aspects, such as how to expose a scene or nail focus. Does that grab too much creative control from photographers? (See my last column, “You’re already using computational photography, but that doesn’t mean you’re not a photographer.”)

Image composition seems to be outside that tension. When you line up a shot, the camera isn’t physically pulling your arms to aim the lens at a better layout. (If it is pulling your arms, it’s time to consider a lighter camera or a sturdy tripod!) But software can affect composition in many circumstances—mostly during editing, but in some cases while shooting, too.

Consider composition

On the surface, composition seems to be the simplest part of photography. Point the camera at a subject and press or tap the shutter button. Experienced photographers know, however, that choosing where the subject appears, how it’s framed in the viewfinder or on the screen, and even which element is the subject, involves more work and consideration. In fact, composition is often the most difficult part of capturing a scene.

So where does computational photography fit into this frame?

In some ways, more advanced AI features such as HDR are easier to pull off than composition. The camera (almost always the one in a smartphone) captures a dozen or so shots at different exposures within a few milliseconds and then combines them to build a well-lit photo. It’s gathering a wide range of data and merging it all together.
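To make that merging step a little more concrete, here is a minimal Python sketch of one way to blend bracketed exposures. It is not how any particular phone does it; real pipelines also align the frames and work on full-color images. The function names, the `sigma` value, and the idea of favoring pixels that sit near mid-gray are all assumptions chosen for illustration.

```python
import math


def well_exposed_weight(value, sigma=0.2):
    """Score a brightness value (0.0 to 1.0): highest at mid-gray, lowest near black or white."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def merge_exposures(frames):
    """Blend same-sized frames (lists of 0.0-1.0 brightness values), pixel by pixel.

    Each output pixel is a weighted average of that pixel across all frames,
    so blown-out or crushed readings contribute less than well-exposed ones.
    """
    merged = []
    for pixels in zip(*frames):
        weights = [well_exposed_weight(p) for p in pixels]
        total = sum(weights)  # always positive, because every weight is above zero
        merged.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return merged


# Two tiny "photos" of the same two pixels: one underexposed, one overexposed.
dark_frame = [0.0, 0.5]
bright_frame = [0.5, 1.0]
blended = merge_exposures([dark_frame, bright_frame])
```

In each blended pixel, the well-exposed reading dominates the crushed or blown-out one, which is the gist of why the merged photo looks evenly lit.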

To apply AI smarts to composition, the camera needs to understand as well as you do what’s in the viewfinder. Some of that happens: smartphones can identify when a person is in the frame, and some can recognize common elements such as the sky, trees, mountains, and the like. But that doesn’t help when determining which foreground object should be prominent and where it should be placed in relation to other objects in the scene.

Plus, when shooting, the photographer is still the one who controls where the lens is pointed. Or are they? I joke about the camera dragging your arms into position, but that’s not far from describing what many camera gimbal devices do. In addition to minimizing camera shake for smooth footage, a gimbal can identify a person and keep them centered in the frame.

But let’s get back to the camera itself. If we have control over the body and where it’s pointed, any computational assistance would need to come from what the lens can see. One way of doing that is by selecting a composition within the pixels the sensor records. We do that all the time during editing by cropping, and I’ll get to that in a moment. But let’s say we want an algorithm to help us determine the best composition in front of us. Ideally, the camera would see more than what’s presented in the viewfinder and choose a portion of those pixels.

Well, that’s happening too, in a limited way. Last year Apple introduced a feature called Center Stage in the 2021 iPad, iPad mini, and iPad Pro models. The front-facing camera has an ultra-wide 120-degree field of view, and the Center Stage software reveals just a portion of that, making it look like any normal video frame. But the software also recognizes when a person is in the shot and adjusts that visible area to keep them centered. If another person enters the space, the camera appears to zoom out to include them, too. (Another example is the Logitech StreamCam, which can follow a single person left and right.) The effect feels a bit slippery, but the movement is pretty smooth and will certainly improve.
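Here is a rough Python sketch of that kind of auto-framing: pick a crop window inside the wide frame that holds everyone the camera has detected, then ease toward it so the view glides instead of jumping. This is not Apple's or Logitech's implementation. The person boxes are assumed to come from some hypothetical detector, and `frame_people`, `ease_toward`, the padding, and the easing factor are names and values I made up for illustration.

```python
def frame_people(frame_w, frame_h, boxes, aspect=16 / 9, padding=0.25):
    """Return a crop (left, top, width, height) that keeps every detected person in view.

    boxes: list of (x, y, w, h) rectangles with positive sizes, as a
    hypothetical person detector might report them.
    """
    if not boxes:
        # Nobody detected: fall back to the full ultra-wide frame.
        return (0.0, 0.0, float(frame_w), float(frame_h))

    # One rectangle around the whole group.
    left = min(x for x, y, w, h in boxes)
    top = min(y for x, y, w, h in boxes)
    right = max(x + w for x, y, w, h in boxes)
    bottom = max(y + h for x, y, w, h in boxes)

    # Add breathing room, then widen or heighten to match the output shape.
    width = (right - left) * (1 + padding)
    height = (bottom - top) * (1 + padding)
    if width / height < aspect:
        width = height * aspect
    else:
        height = width / aspect

    # Never ask for more pixels than the sensor recorded.
    scale = min(1.0, frame_w / width, frame_h / height)
    width *= scale
    height *= scale

    # Center on the group, then slide the window back inside the frame.
    center_x = (left + right) / 2
    center_y = (top + bottom) / 2
    crop_left = min(max(center_x - width / 2, 0.0), frame_w - width)
    crop_top = min(max(center_y - height / 2, 0.0), frame_h - height)
    return (crop_left, crop_top, width, height)


def ease_toward(current, target, factor=0.2):
    """Move the current crop a fraction of the way to the target crop each video frame."""
    return tuple(c + (t - c) * factor for c, t in zip(current, target))


solo = frame_people(1920, 1080, [(900, 400, 120, 280)])
duo = frame_people(1920, 1080, [(900, 400, 120, 280), (100, 400, 120, 280)])
```

With one person, the crop is a fairly tight window centered on them. Add a second person off to the side and the window grows to hold both, which on screen reads as the camera zooming out.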

Composition in editing

You’ll find more auto-framing options in editing software, but the results are more hit and miss.

The concept mirrors what we do when sitting down to edit a photo: the app analyzes the image and detects objects and types of scenes, and then uses that insight to choose alternate compositions by cropping. Many of the apps I’ve chosen as examples use faces as the basis for recomposing; Apple’s Photos app only presents an Auto button in the crop interface when a person is present or when an obvious horizon line suggests that the image needs straightening.
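The straightening half of that idea is easy to sketch. Assuming an app has already found two points along the horizon (the hard part, and not shown here), the tilt is basic trigonometry. The function name and the sample coordinates below are hypothetical, and this is only an illustration, not how Photos or any other app actually works.

```python
import math


def horizon_tilt(point_a, point_b):
    """Return the horizon's tilt in degrees, given two (x, y) points along it.

    A level horizon returns 0. Rotating the image by the opposite amount
    would straighten it. The two points must not share the same x value.
    """
    # Order the points left to right so the result does not depend on argument order.
    (x1, y1), (x2, y2) = sorted([point_a, point_b])
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


# A horizon that drops 35 pixels across a 2,000-pixel-wide photo.
tilt = horizon_tilt((0, 600), (2000, 635))
needs_straightening = abs(tilt) > 0.5  # hypothetical threshold for offering an Auto button
```

An app could use a threshold like the one in the last line to decide whether a correction is worth suggesting at all.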

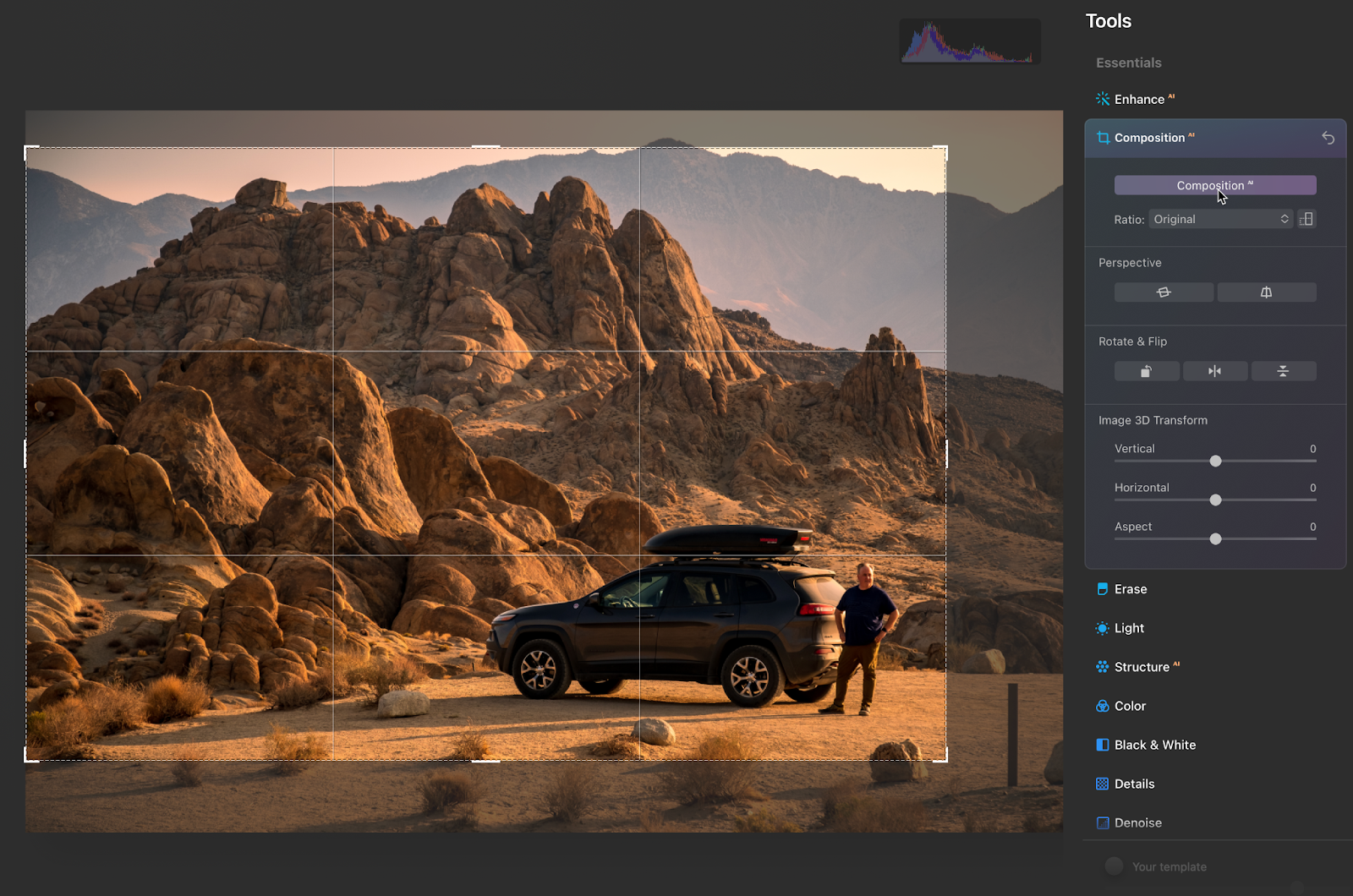

I expected better results from the Composition AI tool in Luminar AI, because the app’s main selling point is that it analyzes every image to determine its contents when you start editing. It did a good job with my landscape test photo, keeping the car and driver in the frame along with the rocks at the top-left, and removing the signpost in the bottom right. However, in the portrait (below), it did the opposite of what I was hoping for, by keeping the woman’s fingers in view at right and cropping out her hair on the left.

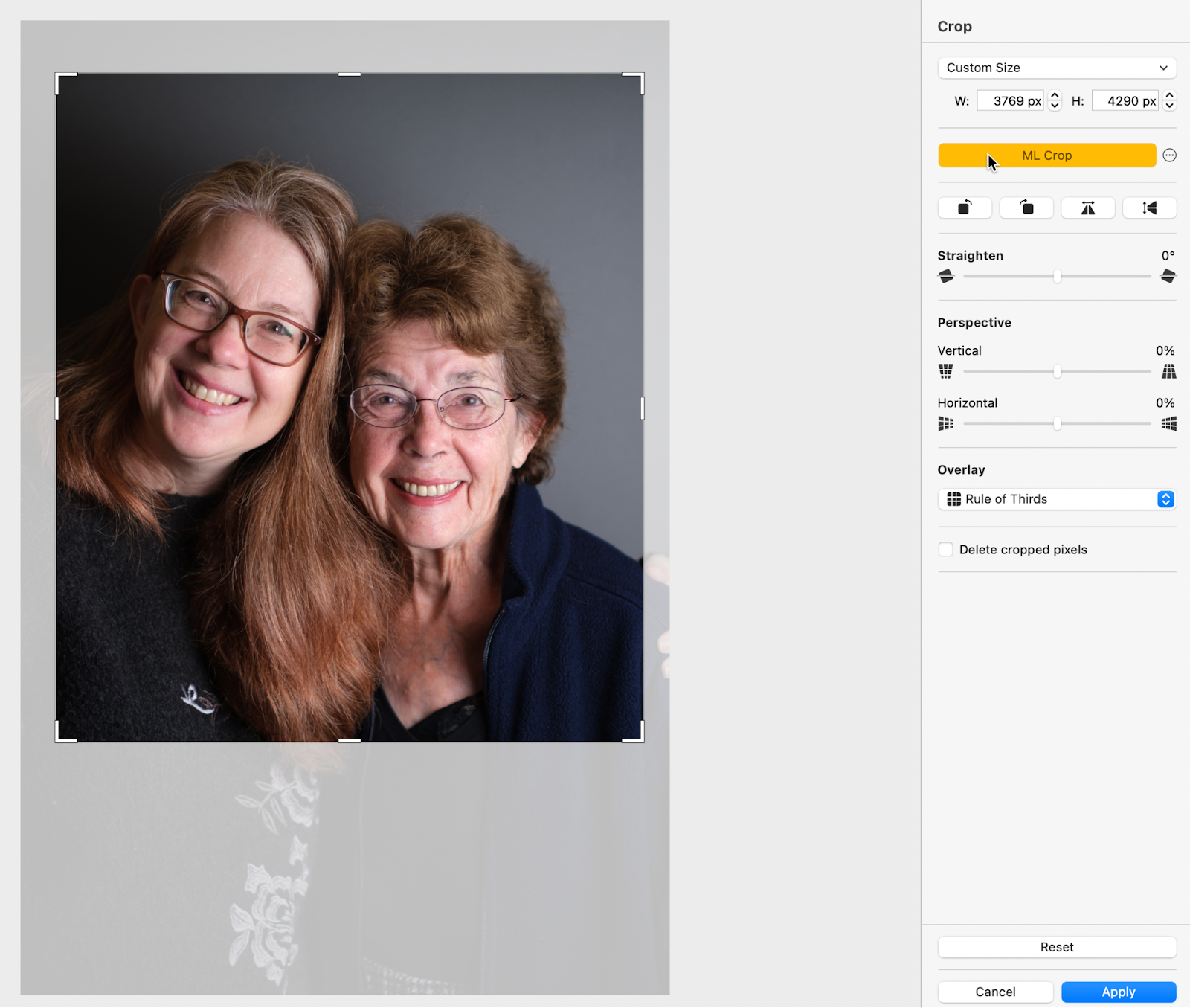

I also threw the two test images at Pixelmator Pro on macOS, since Pixelmator (the company) has been aggressive about incorporating machine-learning-based tools into its editor. It framed the two women well in the portrait (below), although my preference would be to not crop as tightly as it did. In the landscape shot, it cropped in slightly, which made only a marginal improvement.

Adobe Lightroom and Photoshop do not include any automatic cropping feature, but Photoshop Elements does offer four suggested compositions in the Tool Options bar when the Crop tool is selected. However, it’s not clear what the app is using to come up with those suggestions, as they can be quite random.

Comp-u-sition? (No, that’s a terrible term)

It’s essential to note here that these are all starting points. In every case, you’re presented with a suggestion that you can then manipulate manually by dragging the crop handles.

Perhaps that’s the lesson to be learned today: computational photography isn’t all about letting the camera or software do all the work for you. It can give you options or save you some time, but ultimately the choices you make are still yours. Sometimes seeing a poor reframing suggestion can help you realize which elements in the photo work and which should be excised. Or, you may apply an automatic adjustment, disagree (possibly vehemently), and do your own creative thing.

The post Composition in the age of AI: Who’s really framing the shot? appeared first on Popular Photography.