Computational photography technologies aim to automate tasks that are time-consuming or uninspiring: Adjusting the lighting in a scene, replacing a flat sky, culling hundreds of similar photos. But for a lot of photographers, assigning keywords and writing text descriptions makes those actions seem thrilling.

When we look at a photo, the image is supposed to speak for itself. And yet it can’t in so many ways. We work with libraries of thousands of digital images, so there’s no guarantee that a particular photo will rise to the surface when we’re scanning through screenfuls of thumbnails. But AI can assist.

Keywords, terms, descriptors, phrases, expressions…

I can’t overemphasize the advantages of applying keywords to images. How many times have you found yourself scrolling through your photos, trying to recall when the ones you want were shot? How often have you scrolled right past them, or realized they’re stored in another location? If those images contained keywords, the shots could often be found in just a couple of minutes or less.

The challenge is tagging the photos at the outset.

It seems to me that people fall on the far ends of the keywording spectrum. On one side is a hyper-descriptive approach, where the idea is to apply as many terms as possible to describe the contents of an image. These can branch into hierarchies and subcategories and related concepts and all sorts of fascinating but arcane miscellany.

On the other side is where I suspect most people reside: keywords are a time-consuming waste of effort. Photographers want to edit, not categorize!

This is where AI technologies are helping. Many apps use image detection to determine the contents of photos and use that data when you perform a search.

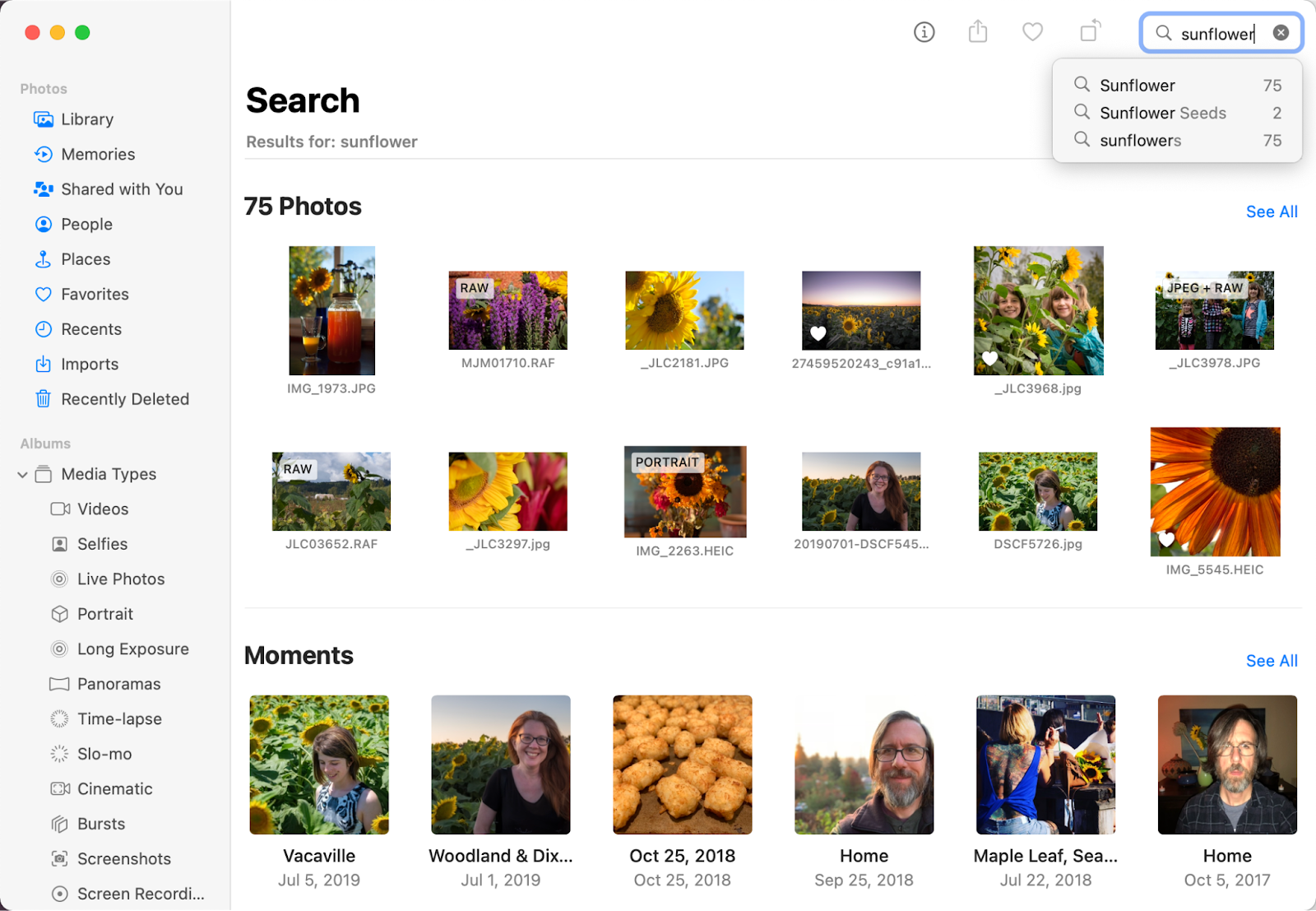

For example, in Apple Photos, typing “sunflower” brings up images in my library that contain sunflowers (and, inexplicably, a snapshot of tater tots). In each of these cases, I haven’t assigned a specific keyword to the images.

Similarly, Lightroom desktop (the newer app, not Lightroom Classic) takes advantage of Adobe Sensei technology to suggest results when I type “sunflower” in the Search field. Although some of my images are assigned keywords (at the top of the results list), it also suggested “Sunflower Sunset” as a term.

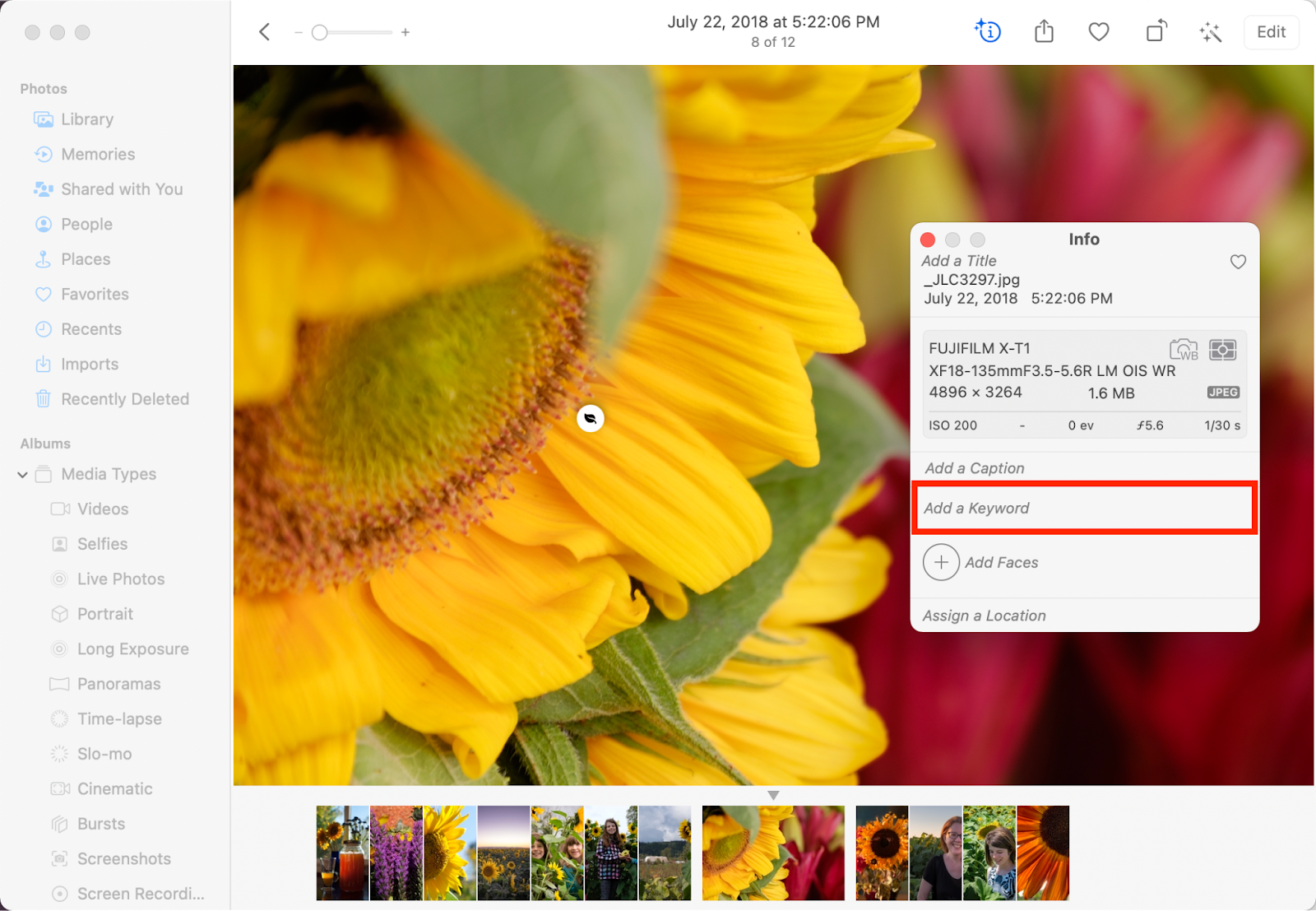

That’s helpful, but the implementation is also fairly opaque. Lightroom and Photos are accessing their own internal data rather than creating keywords that you can view.

What if you don’t use either of those apps? Perhaps your library is in Lightroom Classic or it exists in folder hierarchies you’ve created on disk?

Creating keywords with Excire Foto

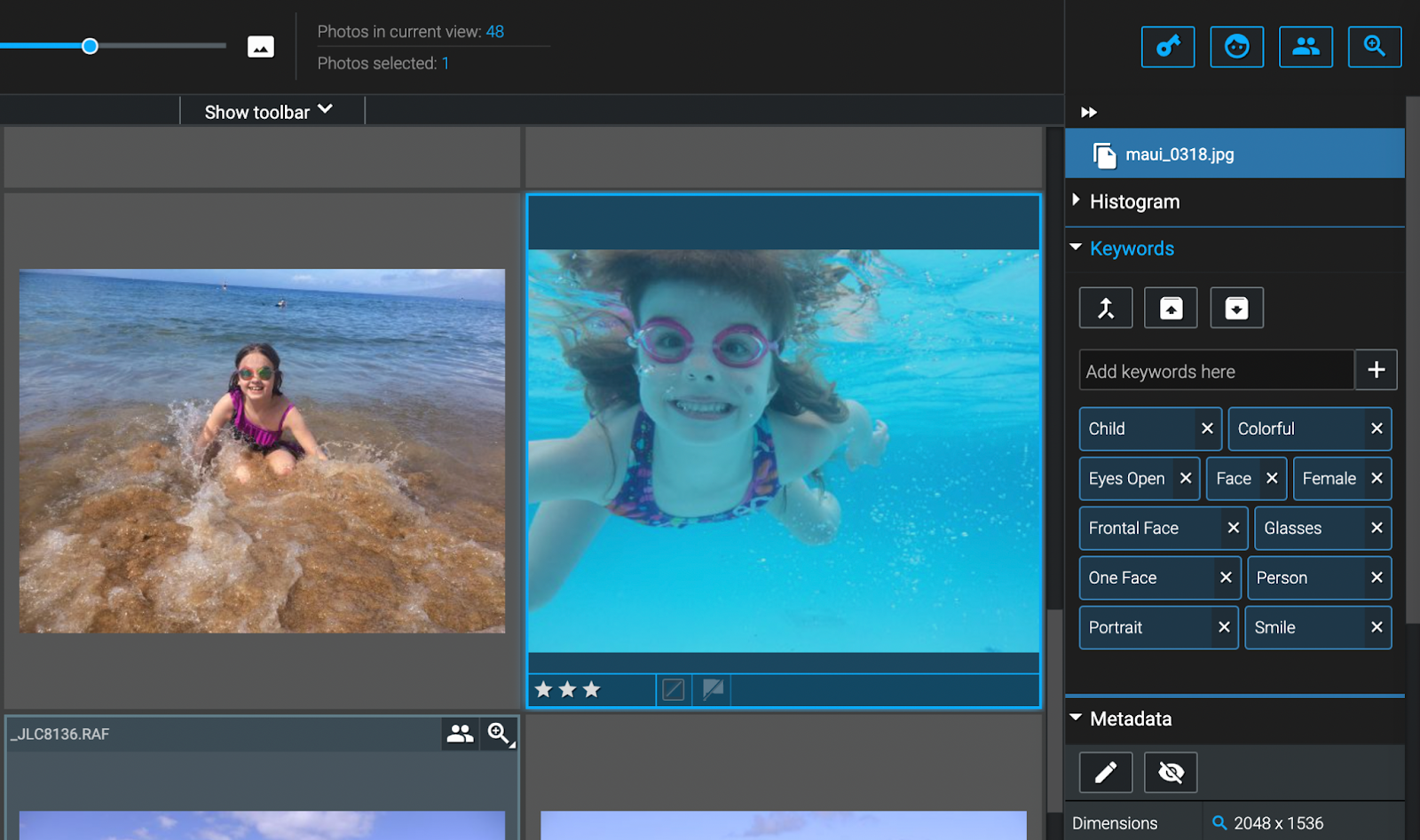

I took two tools from Excire for a quick spin to see what they would do. Excire Foto is a standalone app that performs image recognition on photos and generates exactly the kind of metadata I’m talking about. Excire Search 2 does the same, just as a Lightroom Classic plug-in.

I loaded 895 images into Excire Foto, which it scanned and tagged in just a couple of minutes. It did a great job of creating keywords to describe the images; with people, for instance, it differentiates between adults and children. You can add or remove keywords and then save them back to the image or, for RAW images, to sidecar files.
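If you're curious what "saving keywords to a sidecar file" looks like under the hood, here is a minimal Python sketch. It is not Excire's code, and I'm assuming the common convention of storing keywords in the Dublin Core `dc:subject` field of an XMP document; the function names `build_sidecar` and `read_keywords` are my own, and a real sidecar written by a photo app usually carries extra wrapper data and many more fields.

```python
import xml.etree.ElementTree as ET

# Standard namespace URIs for XMP, RDF, and Dublin Core.
NS = {
    "x": "adobe:ns:meta/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
}
for prefix, uri in NS.items():
    ET.register_namespace(prefix, uri)


def build_sidecar(keywords):
    """Return XMP text that stores the keywords in dc:subject (hypothetical helper)."""
    xmpmeta = ET.Element(f"{{{NS['x']}}}xmpmeta")
    rdf = ET.SubElement(xmpmeta, f"{{{NS['rdf']}}}RDF")
    desc = ET.SubElement(rdf, f"{{{NS['rdf']}}}Description")
    desc.set(f"{{{NS['rdf']}}}about", "")
    subject = ET.SubElement(desc, f"{{{NS['dc']}}}subject")
    bag = ET.SubElement(subject, f"{{{NS['rdf']}}}Bag")
    for word in keywords:
        # Each keyword becomes one list item inside the unordered bag.
        ET.SubElement(bag, f"{{{NS['rdf']}}}li").text = word
    return ET.tostring(xmpmeta, encoding="unicode")


def read_keywords(xmp_text):
    """Return the keywords found in dc:subject of the given XMP text."""
    root = ET.fromstring(xmp_text)
    return [li.text for li in root.findall(".//dc:subject/rdf:Bag/rdf:li", NS)]


xmp = build_sidecar(["sunflower", "adult", "child"])
print(read_keywords(xmp))
```

Because the keywords live in a plain text file next to the RAW image, other apps that understand XMP can typically read them without touching the original photo.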

So if the thought of adding keywords makes you want to stand up and do pretty much anything else, you can now get some of the benefits of keywording without doing the grunt work.

Generating ‘alt text’ for images

Text isn’t just for applying keywords and searching for photos. Many people who are blind or visually impaired still encounter images online, relying on screen reader technology to read the content aloud. So it’s important, when sharing images, to include alternative text that describes their content whenever possible.

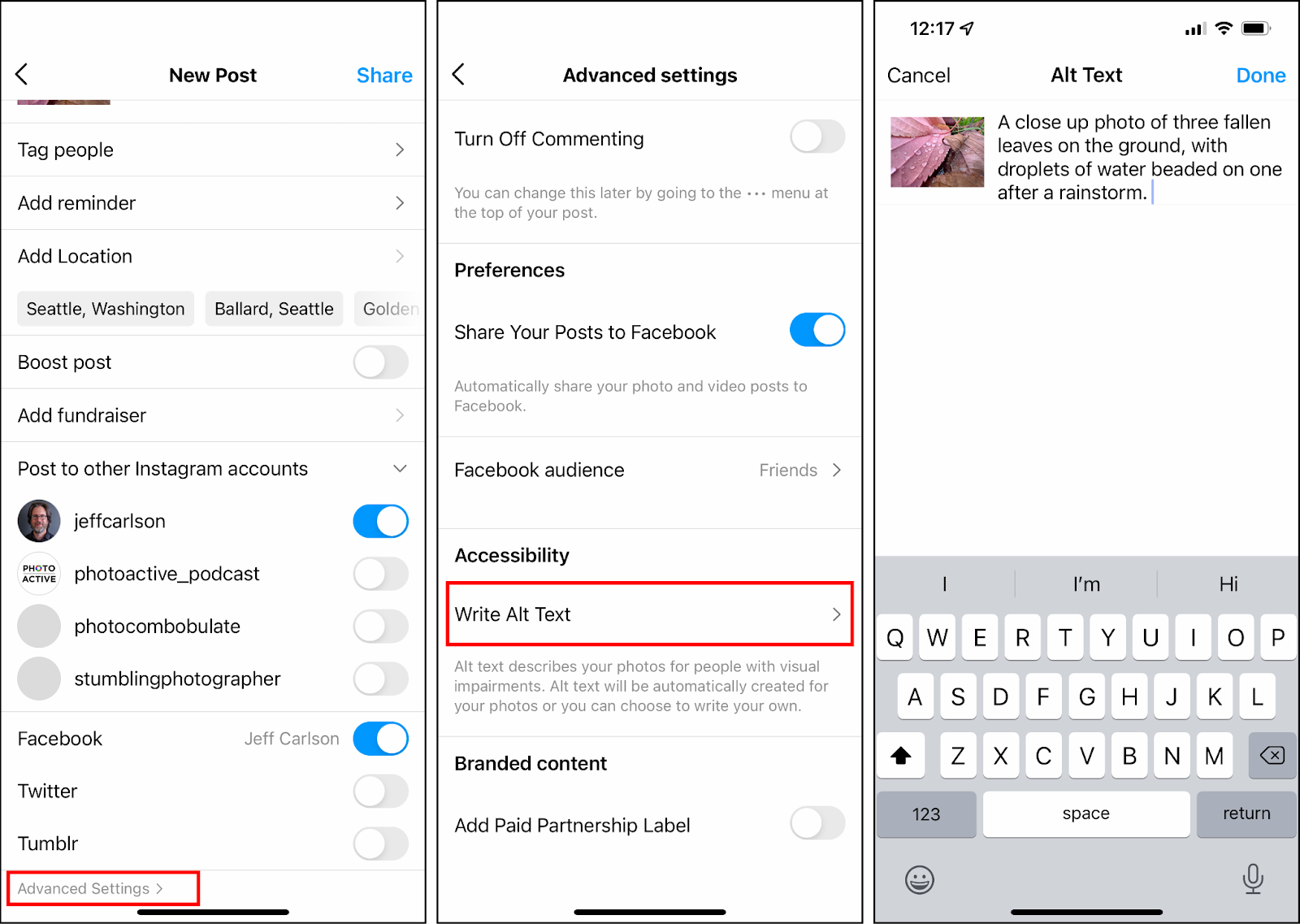

For example, when you add an image to Instagram or Facebook, you can add alt text—though it’s not always obvious how. On Instagram, once you’ve selected a photo and have the option of writing a caption, scroll down to “Advanced Settings,” tap it, and then under “Accessibility” tap “Write Alt Text.”

However, those are additional steps, throwing up barriers that make it less likely that people will create this information.

That being said, Meta, which owns both Instagram and Facebook, is using AI to generate alt text for you. In a blog post from January 2021, the company details “How Facebook is using AI to improve photo descriptions for people who are blind or visually impaired.”

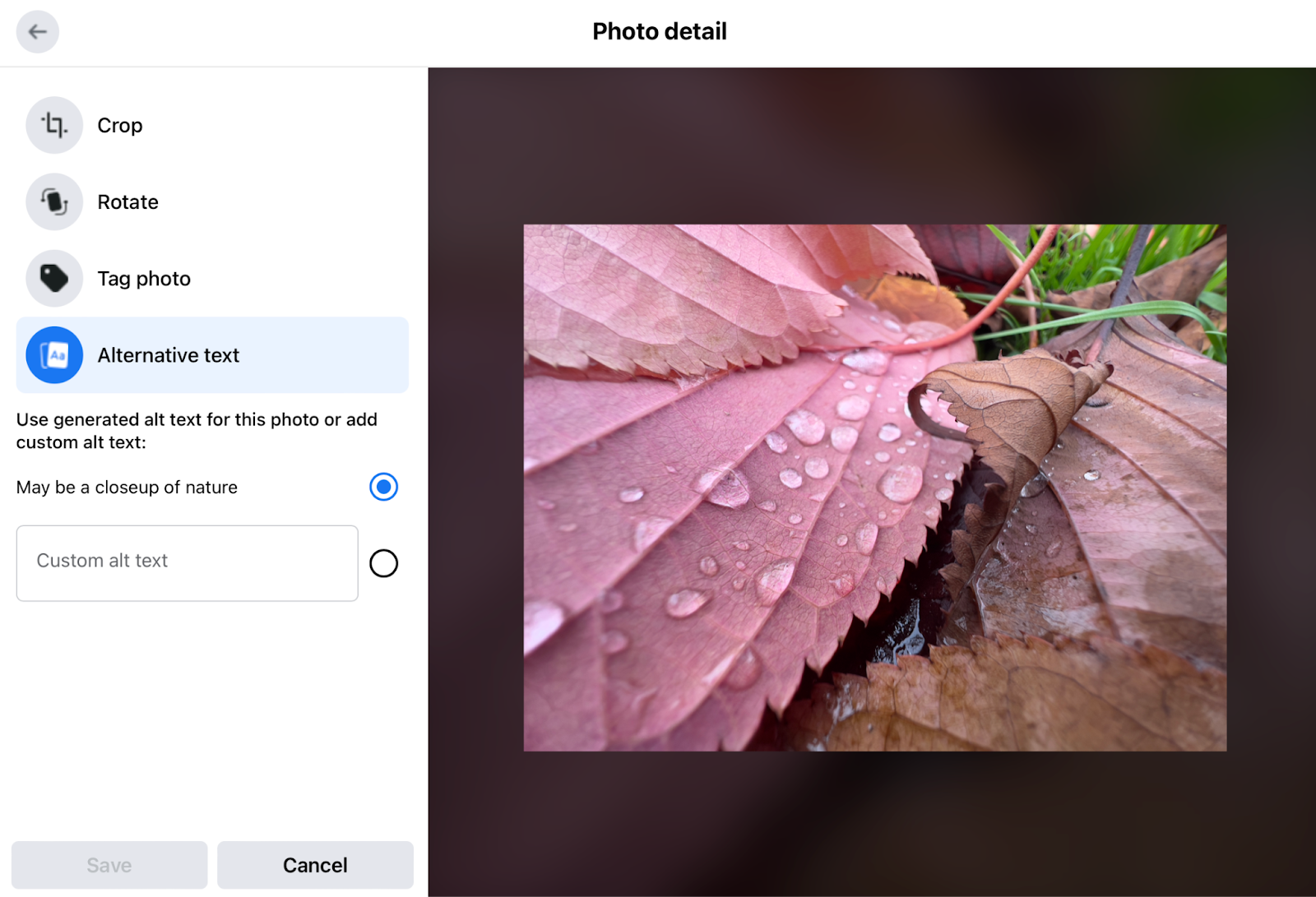

The results can be hit or miss. Facebook describes the leaf photo above as “May be a closeup of nature,” which is technically accurate but not especially helpful.

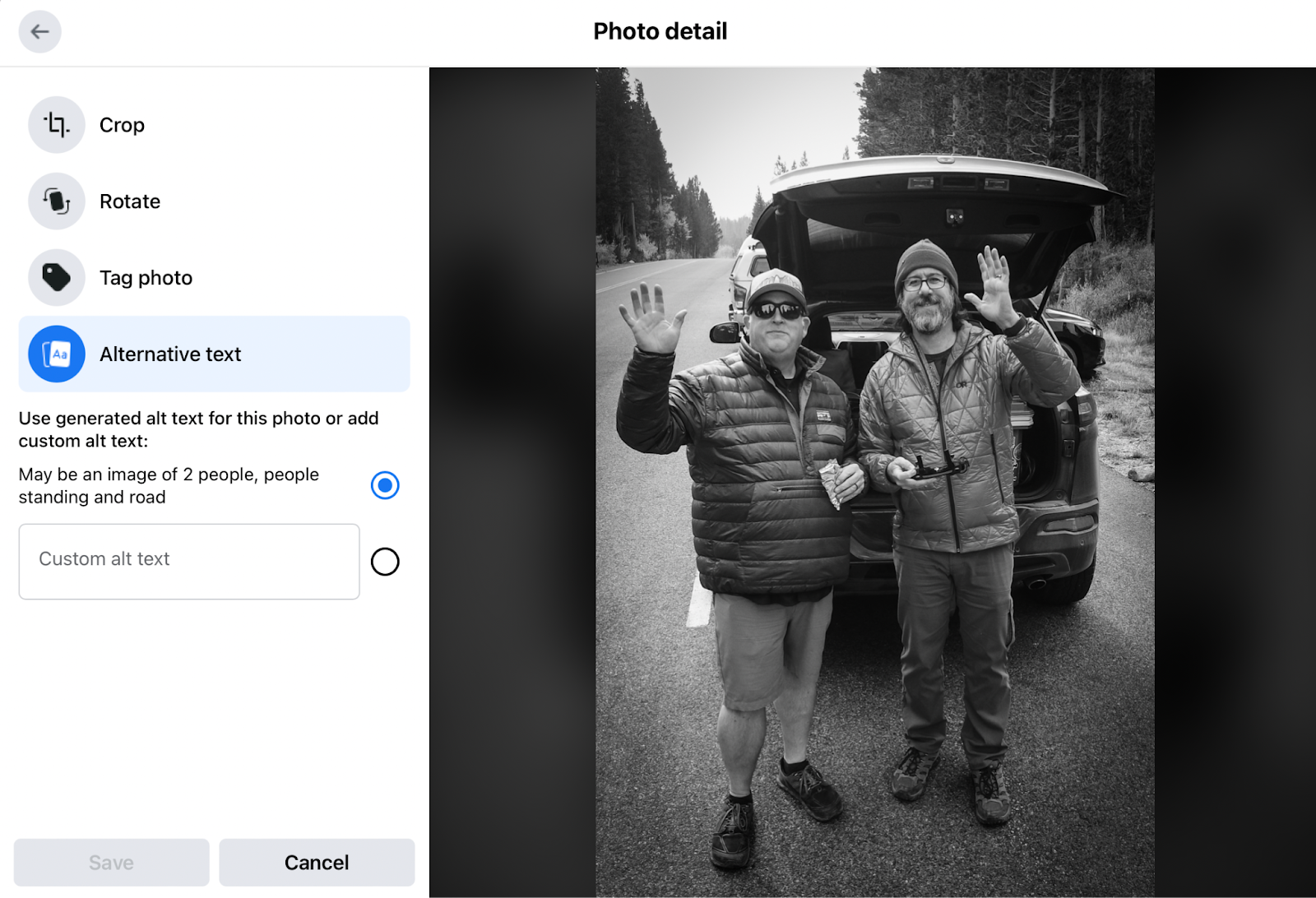

When there are more specific items in the frame, the AI does a bit better. For the image above (an indulgent drone selfie), Facebook came up with “May be an image of 2 people, people standing and road.”
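That “May be…” phrasing reads like a list of detected concepts joined into a sentence. As a rough illustration only, and not Meta's actual implementation, here is a Python sketch that assembles a similarly hedged description from a hypothetical detector's output. The `compose_alt_text` function, the confidence scores, and the 0.8 threshold are all assumptions on my part.

```python
def compose_alt_text(detections, threshold=0.8):
    """Build a hedged description from (label, confidence) pairs.

    Labels scoring below the (assumed) threshold are left out.
    """
    kept = [label for label, score in detections if score >= threshold]
    if not kept:
        return "May be an image"
    if len(kept) == 1:
        return f"May be an image of {kept[0]}"
    # Join all but the last label with commas, then add "and" before the last.
    return f"May be an image of {', '.join(kept[:-1])} and {kept[-1]}"


# Hypothetical detector output for the drone selfie.
detections = [
    ("2 people", 0.95),
    ("people standing", 0.90),
    ("road", 0.85),
    ("bicycle", 0.30),  # low confidence, so it is dropped
]
print(compose_alt_text(detections))
```

The design point is the hedge itself: dropping low-confidence guesses and prefacing the rest with “May be” keeps the description from overstating what the software actually knows.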

Another example is work being done by Microsoft to use machine learning to create text captions. In a paper last year, researchers presented a process called VIVO (VIsual VOcabulary pretraining) for generating captions with more specificity.

So while there’s progress, there’s also still plenty of room for improvement.

Yes, Automate This Please

Photographers get angsty when faced with the notion that AI might replace them in some way, but creating keywords and writing captions and alt text doesn’t seem to apply in the same way. This is one area where I’m certainly happy to let the machines shoulder some of the work, provided of course that the results are accurate.

The post How to use artificial intelligence to tag and keyword photos for better organization appeared first on Popular Photography.