Have you ever been on a phone call with someone new or had to speak on a video conference to a screen full of faceless squares, with everyone’s appearance left to guesswork? You may not have to use your imagination in these scenarios for much longer. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have found a way to produce AI-generated faces that render an image based solely on a speaker’s voice. The technology is called Speech2Face and it works eerily well.

The Speech2Face study

A paper on Speech2Face was first published in 2019. In it, the authors acknowledge that they aren’t the first to study the correlation between speech and physical features. An alternative to their method focuses on predetermined elements gleaned from a voice, which are then used to either procure an image or create a rendering.

“This approach limits the predicted face to resemble only a predefined set of attributes,” the researchers noted. They instead wanted their research to be more open-ended, exploring what data their model could extract from audio input.

“Our approach of predicting full visual appearance directly from speech allows us to explore this question without being restricted to predefined facial traits,” they wrote.

In order to perform the study, researchers created a deep neural network to analyze millions of YouTube videos and over 100,000 people speaking. The model learned audiovisual, voice, and face correlations, which helped it to render predictions for attributes such as age, gender, and ethnicity. The training process involved no human involvement—researchers assigned the AI a gigantic set of data to analyze and let it do its thing.

The results

Related: What is computational photography?

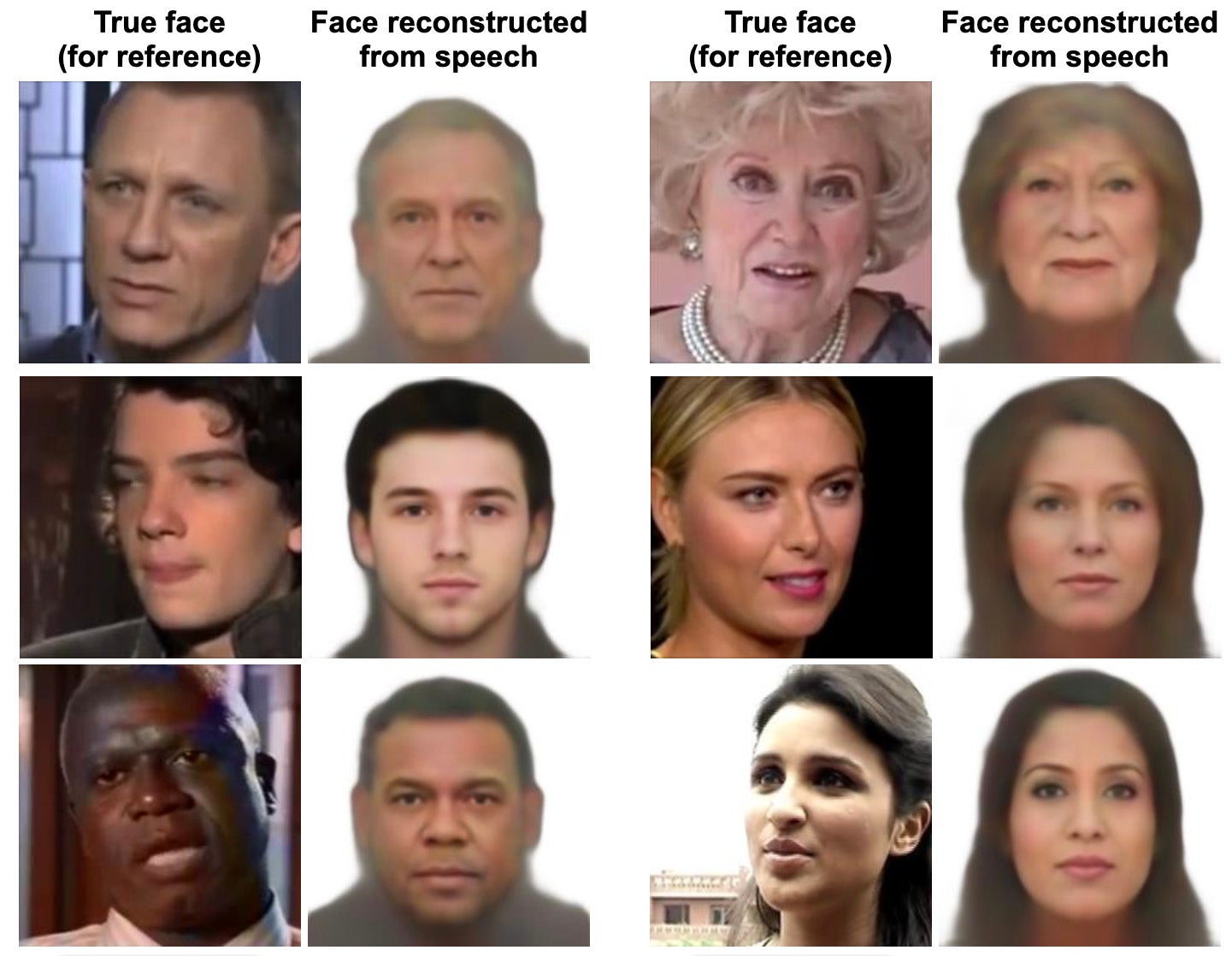

When analyzing the results, the Speech2Face team made sure to clarify that an exact sketch of the speaker was not the end goal.

“Our goal is not to predict a recognizable image of the exact face, but rather to capture dominant facial traits of the person that are correlated with the input speech,” they wrote. “We show that our reconstructed face images can be used as a proxy to convey the visual properties of the person including age, gender, and ethnicity.”

The model was fairly accurate in predicting gender, but had hiccups predicting ethnicity, which they attributed to a lack of data. Language and accent also affected the resulting image, and the behavior of the AI model was mixed, at times producing the correct likeness of the speaker and at others, the completely wrong one.

The researchers envision that eventually, the technology could be implemented to create personalized animations of speakers.

“Such cartoon re-rendering of the face may be useful as a visual representation of a person during a phone or a video-conference call, when the person’s identity is unknown or the person prefers not to share his/her picture,” they wrote. “Our reconstructed faces may also be used directly to assign faces to machine-generated voices used in home devices and virtual assistants.”

The post Is the end of the awkward, faceless Zoom call near? appeared first on Popular Photography.