In a paper published in Scientific Reports earlier this year, researchers at Radboud University in the Netherlands, led by PhD candidate Thirza Dado, combined non-invasive brain imaging and machine-learning models in an attempt to read people’s minds, or at least to recreate the image they’re looking at. It’s a fascinating experiment, though it’s easy to overstate its success. Still, mind-reading AI might not be as far off as we think.

fMRI and AI imaging

Functional magnetic resonance imaging (fMRI) is a noninvasive technique used to detect brain activity by measuring changes in blood flow to different areas of the brain. It’s been used for the last few decades to identify which parts of the brain are responsible for which functions.

In this study, Dado’s team went a step further. They used an AI model (specifically, a generative adversarial network, or GAN) to interpret the fMRI readings and convert them back into an image. The results are pretty impressive.

A trained AI

Thirza Dado/Radboud University/Scientific Reports

In the study, Dado’s team showed participants in an fMRI scanner 36 generated faces, each repeated 14 times, for the test set, and 1,050 unique generated faces for the training set (over nine sessions).

Using the fMRI data from the 1,050 unique faces, they trained the AI model to convert the brain imaging results into actual images. (It works like a more primitive version of DALL-E 2 or Stable Diffusion.)
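For readers curious how a model can be "trained" to turn brain scans into pictures, here is a minimal sketch of one common approach. It is an assumption for illustration, not a description of the team's exact pipeline: learn a linear mapping (ridge regression) from fMRI voxel responses to the latent vector that a GAN generator uses to draw a face. The data are random stand-ins, and the sizes (`n_voxels`, `latent_dim`) and the helper `fit_ridge` are hypothetical. A real system would then feed the predicted latent vectors into a pretrained generator, which is not shown here.

```python
import numpy as np

rng = np.random.default_rng(0)

# 1,050 training and 36 test faces, matching the study's counts; the voxel
# and latent sizes are made up for this sketch.
n_train, n_test = 1050, 36
n_voxels, latent_dim = 500, 64

# Simulated data (assumption): each face has a latent vector, and the brain
# response is a noisy linear function of that vector.
true_map = rng.normal(size=(latent_dim, n_voxels))
z_train = rng.normal(size=(n_train, latent_dim))
z_test = rng.normal(size=(n_test, latent_dim))
X_train = z_train @ true_map + 0.5 * rng.normal(size=(n_train, n_voxels))
X_test = z_test @ true_map + 0.5 * rng.normal(size=(n_test, n_voxels))

def fit_ridge(X, Z, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Z."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Z)

# Learn voxels -> latent vector from the training faces only.
W = fit_ridge(X_train, z_train, alpha=10.0)

# Decode latent vectors for the held-out test faces.
z_pred = X_test @ W

# Per-face correlation between decoded and true latent vectors.
corr = [np.corrcoef(z_pred[i], z_test[i])[0, 1] for i in range(n_test)]
print(f"mean latent correlation on test set: {np.mean(corr):.2f}")
```

The linear decoder is chosen here only because it is simple and easy to read; the heavy lifting of producing a realistic face would fall to the generator that consumes `z_pred`.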

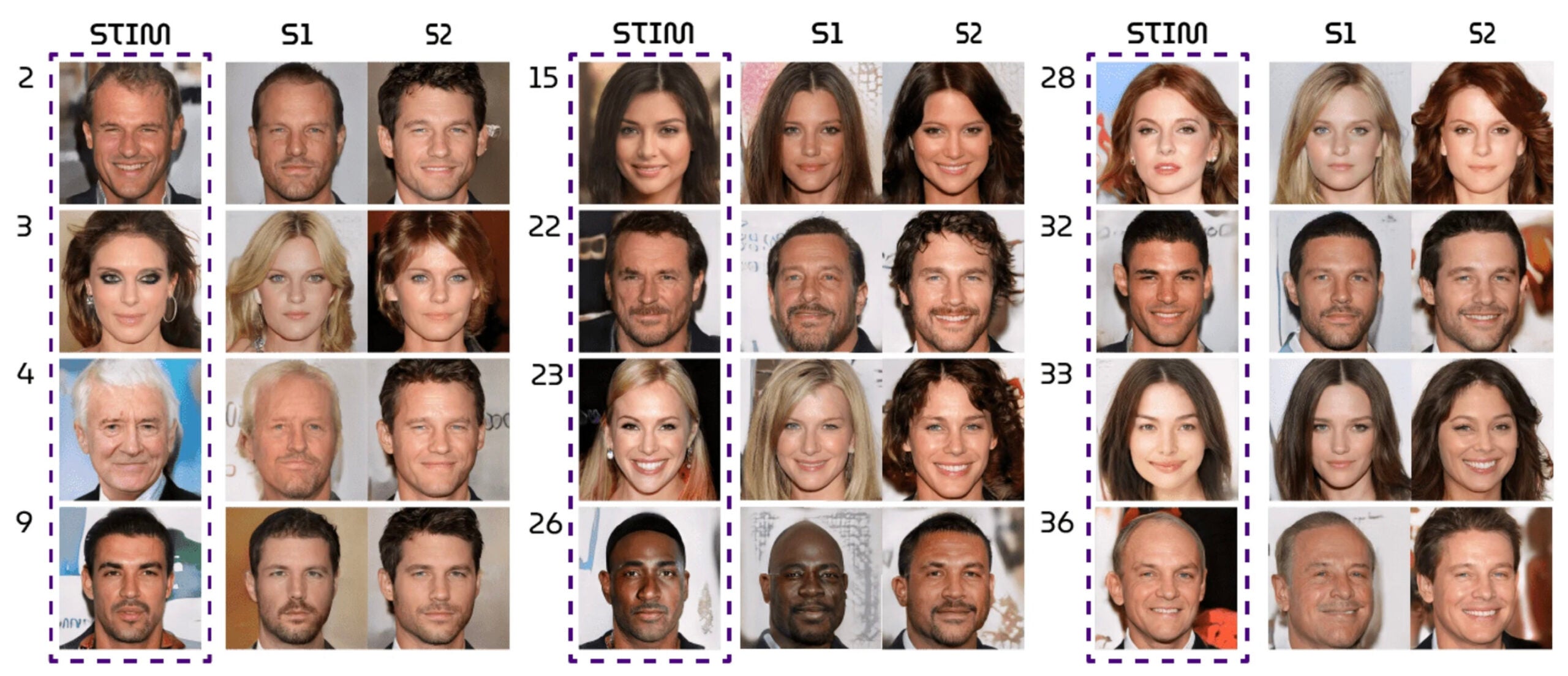

The results of the study, then, are based on the AI model’s interpretation of the fMRI data from the 36 faces in the test set. You can see a sample of them above. The image in the first column is the target image, and the images in the second and third columns are the AI-generated results from the two subjects.

Is this mind reading?

While it’s easy to cherry-pick a few examples where the image (re)created by the AI closely matches the target image, it’s hard to call this mind reading. The study measured how accurately the AI matched the target face’s gender, age, and pose, and whether the generated face was wearing eyeglasses or smiling. It did not measure whether the generated face was recognizable as the target.
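To make that distinction concrete, here is a hypothetical attribute-matching score: for each attribute, the fraction of test faces where the reconstruction's label agrees with the target's. The function name, the categorical labels, and the three toy faces are all made up for illustration and are not data from the study.

```python
from typing import Dict, List

# Hypothetical attribute labels for one face, e.g. {"gender": "f", ...}.
Attributes = Dict[str, object]

def attribute_accuracy(targets: List[Attributes], recons: List[Attributes]) -> Dict[str, float]:
    """Fraction of faces where each reconstructed attribute matches the target's."""
    keys = targets[0].keys()
    return {
        k: sum(t[k] == r[k] for t, r in zip(targets, recons)) / len(targets)
        for k in keys
    }

# Toy labels for three faces (illustrative only, not from the study).
targets = [
    {"gender": "f", "age": "adult", "pose": "left", "eyeglasses": False, "smiling": True},
    {"gender": "m", "age": "child", "pose": "front", "eyeglasses": True, "smiling": False},
    {"gender": "m", "age": "adult", "pose": "right", "eyeglasses": False, "smiling": True},
]
recons = [
    {"gender": "f", "age": "adult", "pose": "left", "eyeglasses": False, "smiling": False},
    {"gender": "m", "age": "adult", "pose": "front", "eyeglasses": True, "smiling": False},
    {"gender": "m", "age": "adult", "pose": "right", "eyeglasses": False, "smiling": True},
]

print(attribute_accuracy(targets, recons))
```

Even a perfect score on every attribute would say nothing about whether a reconstruction looks like the specific person in the target image, which is exactly the limitation described here.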

It’s also important to note that the AI was trained on fMRI data from the test subjects. If you or I were to hop into an fMRI machine, the results would likely be incredibly scattershot. We’re still a long way from being able to accurately read anyone’s mind, with or without a car-sized scientific instrument. Still, it’s fascinating to see how AI tools and machine learning can play a role in areas other than winning fine art competitions.

The post Mind-reading AI creates images from human brainwaves appeared first on Popular Photography.