The concept of selective imaging, the idea that you keep only the parts of an image you need, isn’t new. Blurring, encryption, and in-camera modification are among the methods currently available. However, they all raise security problems: because the editing happens after the data is collected, the RAW files remain vulnerable. A recent study may offer a solution. In it, a team at the University of California, Los Angeles (UCLA) developed a privacy camera that erases unwanted information optically, using light diffraction.

Problems with the current technology

Simplistic solutions like capturing low-resolution files are impractical because they sacrifice image quality across the entire frame, and certain technologies, such as autopilot, can’t operate at that resolution. There is also the risk that models could reconstruct the original image and recover the hidden information. The UCLA team explored a technology that edits unwanted objects out of a frame before a digital file is created, so the sensitive information never reaches a RAW file.

“Passively enforcing privacy before the image digitization can potentially provide more desired solutions to both of these challenges outlined earlier,” write the authors of the study.

How does the UCLA privacy camera work?

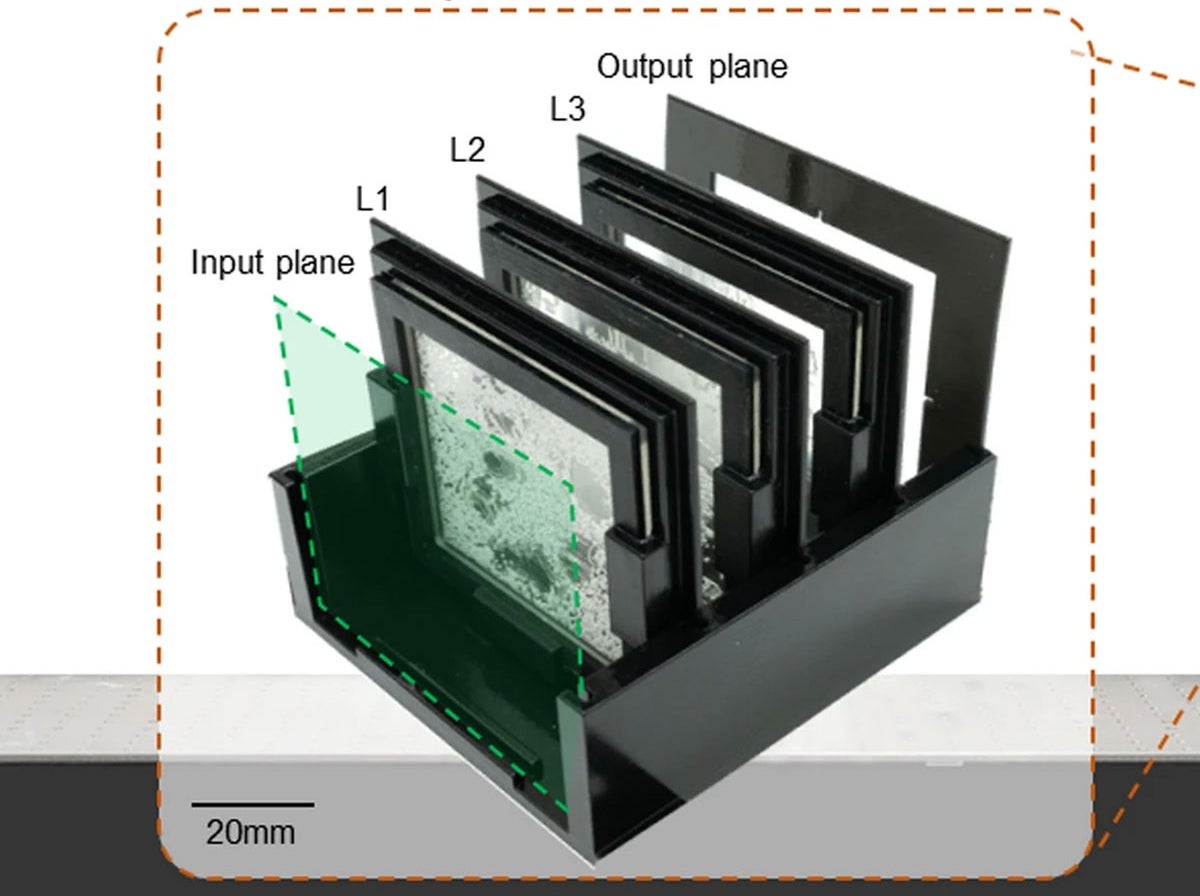

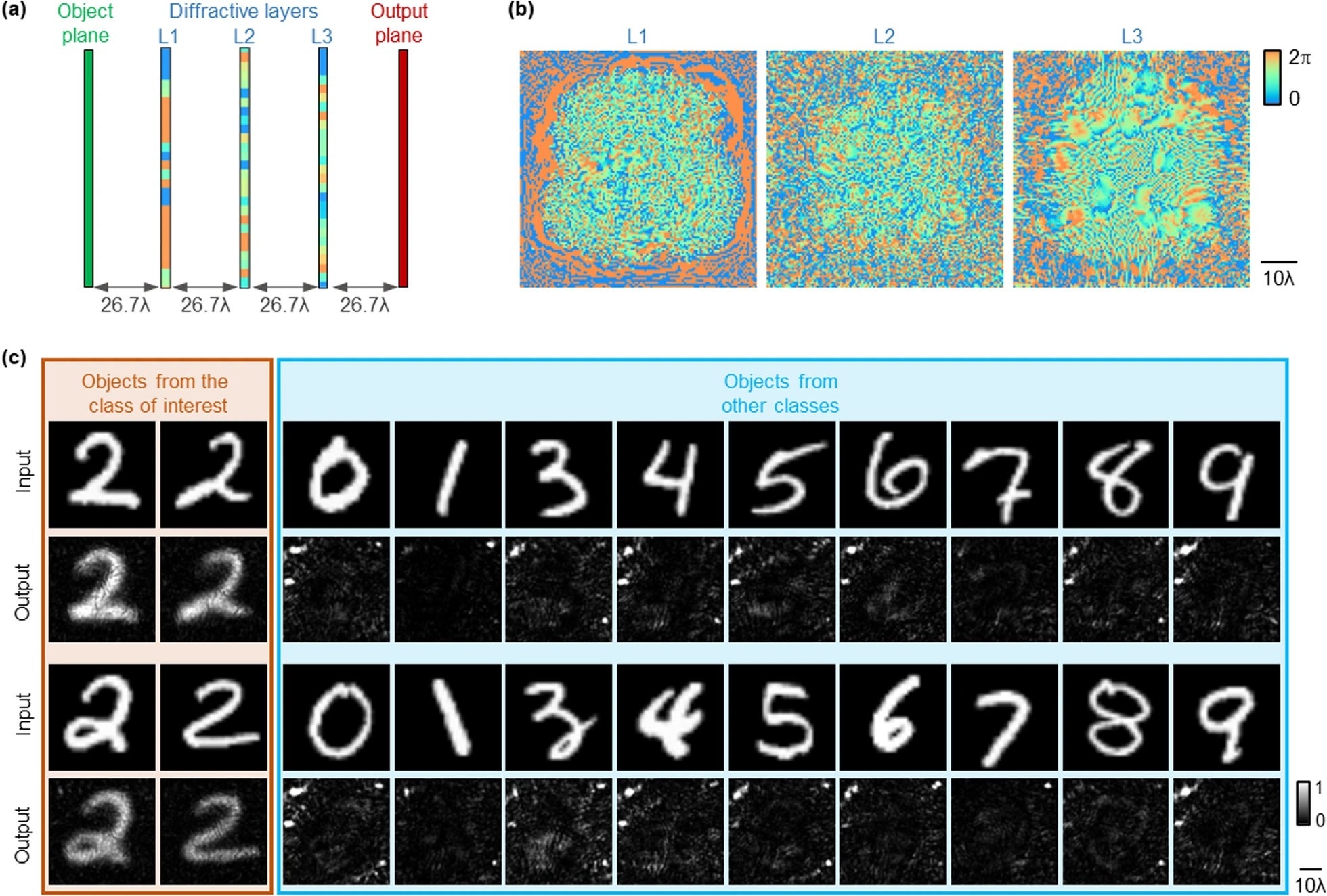

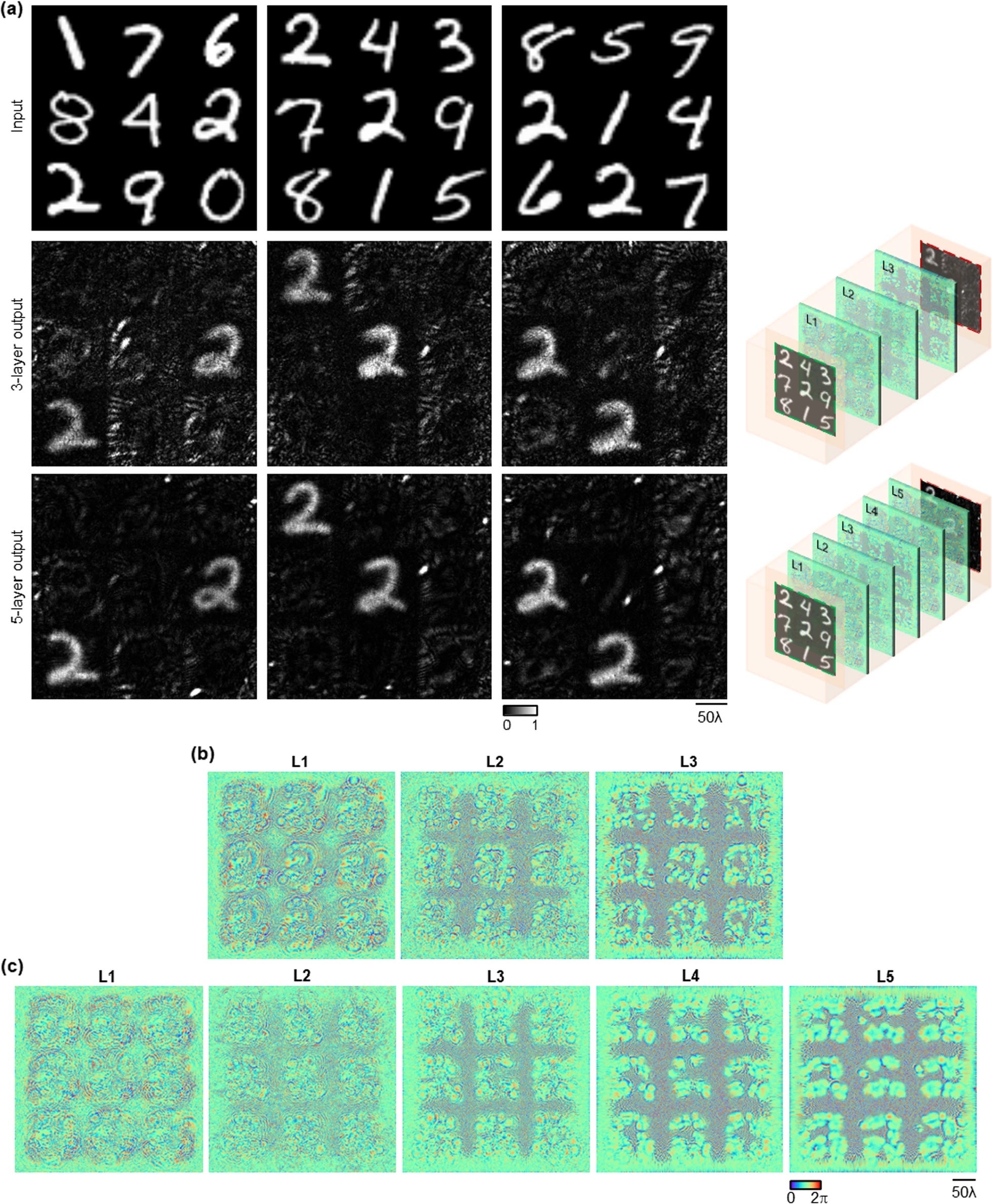

The UCLA privacy camera operates via a concept known as diffractive computing. The process gives the camera the ability to capture what the researchers call “target types” (in their example, a handwritten “2”) with good accuracy. Any input that doesn’t match the target type is erased so that the output only shows the objects of interest. Essentially, the 3D-assembled diffractive layers act as a filter, so only the necessary information can pass through.

As a result, the target types appear on the output plane, while everything else is rendered as noise (a photographer might liken it to background blur). The method is considered more secure than others because none of the information about the non-target types is ever recorded. For the same reason, the final image takes less storage and less effort to transmit from the camera.
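To picture the filtering idea, here is a toy sketch in Python. It is not the researchers' design: the real diffractive layers are optimized optical surfaces acting on light waves, while this example simply assumes the camera behaves like a linear filter that passes whatever matches a target pattern and drops the rest. The names `make_filter` and `detect`, the four-sample patterns, and the noise level are all hypothetical and exist only for illustration.

```python
import random


def dot(a, b):
    """Inner product of two equal-length lists of numbers."""
    return sum(x * y for x, y in zip(a, b))


def make_filter(target):
    """Return a function that keeps only the part of a signal matching `target`.

    Assumption: the camera is modeled as a projection onto one target pattern.
    """
    norm_sq = dot(target, target)

    def apply(signal):
        scale = dot(signal, target) / norm_sq
        return [scale * t for t in target]

    return apply


def detect(field, noise_level=0.01, seed=0):
    """Record intensity (squared amplitude) plus a little simulated sensor noise."""
    rng = random.Random(seed)
    return [v * v + noise_level * rng.random() for v in field]


# Hypothetical patterns: `non_target` is chosen to be orthogonal to `target`.
target = [1.0, 1.0, -1.0, -1.0]
non_target = [1.0, -1.0, 1.0, -1.0]
mixed = [t + n for t, n in zip(target, non_target)]  # both objects in one scene

camera = make_filter(target)

target_image = detect(camera(target))      # target comes through at full strength
erased_image = detect(camera(non_target))  # only faint sensor noise remains
mixed_field = camera(mixed)                # the non-target part is dropped
```

In this toy model the non-target pattern never reaches the detector, so there is nothing to recover from the stored values afterward. That is the property the article describes, in a far simpler setting than real optics.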

The UCLA privacy camera experiment and results

To test the idea, the researchers used 3D-printed diffractive layers capable of processing one “target type” from a group of handwritten digits, in this case the number two. They found that the design held up across variations in handwriting, and that it could pick out multiple instances of the target type within a group.

“Unlike conventional privacy-preserving imaging methods that rely on post-processing of images after their digitization, our diffractive camera design enforces privacy protection by selectively erasing the information of the non-target objects during the light propagation, which reduces the risk of recording sensitive raw image data,” the team concludes.

While the technology is still in the early stages of development, it offers a novel potential solution for applications ranging from self-driving cars to surveillance tools. We’re interested to see where it ends up.

The post This camera lets you choose what to photograph—and ignores the rest appeared first on Popular Photography.