Maybe questioning my own intelligence isn’t the best way to kick off this piece, but if I’m going to write a column called “The Smarter Image,” I need to be honest. In many ways, my cameras are smarter than I am—and while that sounds threatening to many photographers, it can be incredibly freeing.

Computational photography is changing the craft of photography in significant ways (see my previous column, “The next age of photography is already here”). As a result, the technology is usually framed as an adversary to traditional photography. If the camera is “doing all the work,” then are we photographers just button pushers? If the camera is making so many decisions about exposure and color, and blending shots to create composite images for greater dynamic range, is there no longer any art to photography?


I’m deliberately exaggerating because we as a species tend to jump to extremes (see also: the world). But extremes also allow us to analyze differences more clearly.

One part of this is our romanticized notion of photography. We hold onto the idea that the camera simply captures the world around us as it is, reflecting the environment and burning those images onto a chemical emulsion or a digital sensor. In the early days, the photographer needed to choose the shutter speed, aperture, focus, and film stock; none of those were automated. Engaging with all of those settings made the process more hands-on, requiring more of the photographer’s skills and experience.

Now, particularly with smartphones, a good photo can be made by just pointing the lens and tapping a button. And in many cases, that photo will have better focus, more accurate color, and an exposure that balances highlights and shadows even in challenging light.

Notice that those examples, both traditional and modern, solve technical problems, not artistic ones. It’s still our job to find good light, work out composition, and capture emotion in subjects. When the camera can take care of the technical, we gain more space to work out the artistic aspects.

Let’s consider some examples of this in action. Currently, you’ll see far more computational photography features in smartphones, but AI is creeping into DSLR and mirrorless systems, too.

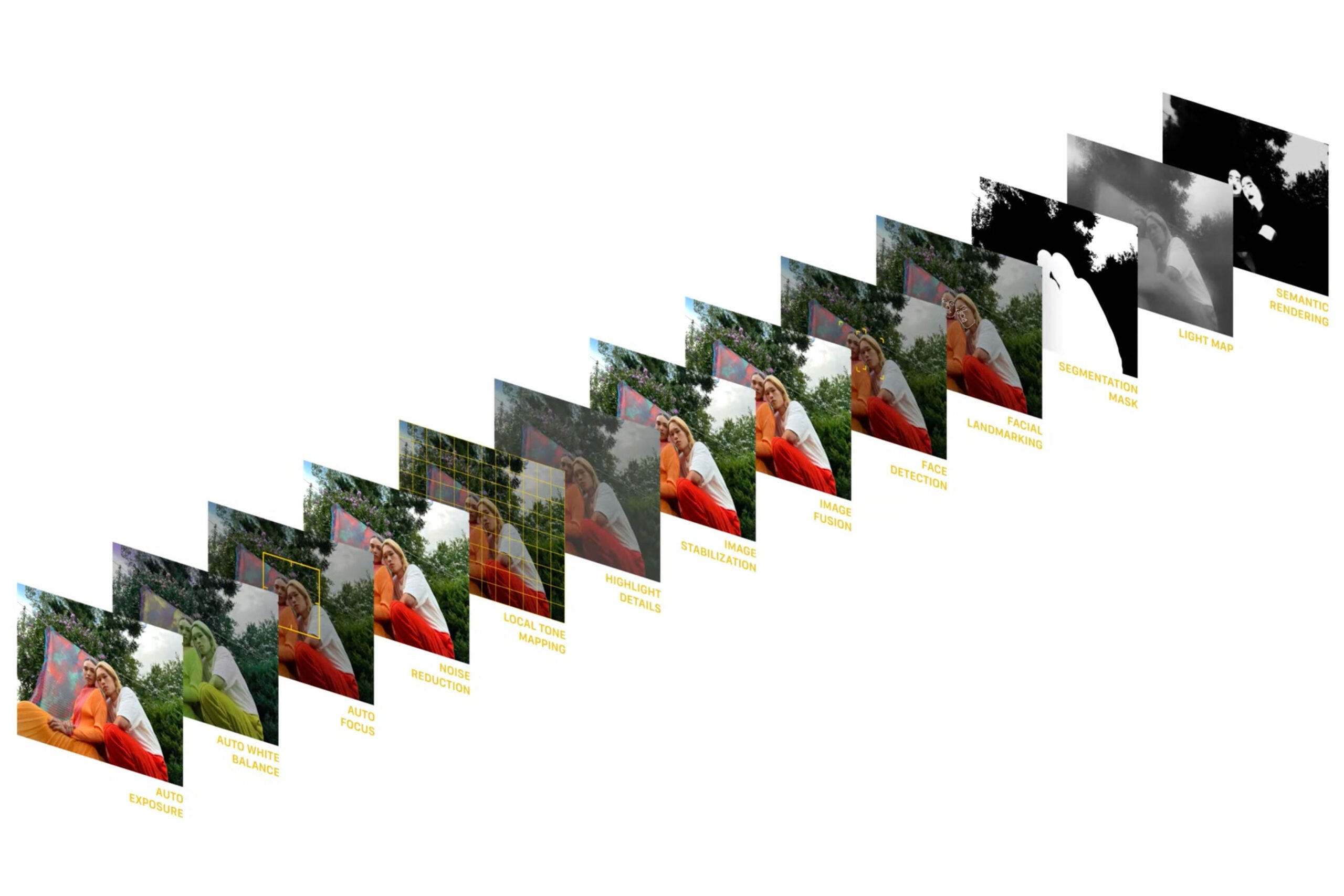

Multi-image fusion

This one feels the most “AI” in terms of being both artificial and intelligent, and yet the results are often quite good. Many smartphones, when you take a picture, record several shots at various exposure levels and merge them together into one composite photo. It’s great for balancing difficult lighting situations and creating sharp images where a long exposure would otherwise make the subject blurry.
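For the curious, here’s a minimal Python sketch of what “merging several exposures” can mean. It weights each pixel by how close it sits to mid-gray, so every part of the scene leans on whichever frame exposed it best. The three-pixel “scene,” the function names, and the weighting curve are all my own assumptions for illustration; no phone maker publishes its actual pipeline, and real ones also align frames and blend far more carefully.

```python
import math

def well_exposedness(value, target=0.5, spread=0.2):
    """Weight near 1 for mid-tones, near 0 for crushed shadows or blown highlights."""
    return math.exp(-((value - target) ** 2) / (2 * spread ** 2))

def fuse_exposures(frames):
    """Blend aligned frames pixel by pixel, favoring the best-exposed frame at each pixel."""
    fused = []
    for values in zip(*frames):
        weights = [well_exposedness(v) for v in values]
        total = sum(weights)  # always positive, because exp() never returns zero
        fused.append(sum(w * v for w, v in zip(weights, values)) / total)
    return fused

# Hypothetical scene of three pixels (deep shadow, mid-tone, bright sky), brightness 0..1.
under = [0.02, 0.20, 0.55]   # short exposure: sky holds detail, shadow is crushed
normal = [0.10, 0.50, 0.95]  # metered exposure: shadow is dark, sky is nearly blown
over = [0.45, 0.90, 1.00]    # long exposure: shadow is readable, sky is blown out

fused = fuse_exposures([under, normal, over])
print([round(v, 2) for v in fused])
```

In the fused result, the shadow pixel comes out brighter than in the metered frame and the sky pixel comes out darker, which is the balancing act described above.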

Google’s Night Sight feature and Apple’s Night and Deep Fusion modes coax light out of darkness by capturing a series of images at different ISO and exposure settings, then de-noising the pieces and merging the results. It’s how you can get usable low-light photos even when shooting hand-held.
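Why does stacking frames help in the dark? Random sensor noise differs from shot to shot, while the scene stays the same, so averaging a burst cancels much of the noise. The sketch below simulates that with made-up numbers (the scene values, the noise level, and the 16-frame burst are assumptions of mine). It skips the frame alignment and motion handling that a real night mode needs, and it isn’t a description of Apple’s or Google’s code.

```python
import random

def capture_noisy_frame(scene, noise_sigma, rng):
    """Simulate one short hand-held exposure: true brightness plus random sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def merge_frames(frames):
    """Merge already-aligned frames by averaging each pixel position across the burst."""
    return [sum(values) / len(values) for values in zip(*frames)]

def rms_error(image, scene):
    """Root-mean-square difference between an image and the noise-free scene."""
    return (sum((a - b) ** 2 for a, b in zip(image, scene)) / len(scene)) ** 0.5

rng = random.Random(42)  # fixed seed so the simulation is repeatable
scene = [0.05 + 0.10 * (i % 10) / 10 for i in range(2000)]  # a dim, low-contrast scene
frames = [capture_noisy_frame(scene, 0.05, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
merged_error = rms_error(merge_frames(frames), scene)
print(f"single frame error: {single_error:.4f}, merged error: {merged_error:.4f}")
```

With purely random noise, averaging N frames reduces the error by roughly the square root of N, which is why a longer burst buys a cleaner picture.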

What you don’t get as a photographer is transparency into how the fusion is happening; you can’t reverse-engineer the component parts. Even Apple’s ProRAW format, which combines the advantages of shooting in RAW (greater dynamic range, more data available for editing) with multi-image fusion, creates a demosaiced DNG file. It certainly has more information than the regular processed HEIC or JPEG file, but the data isn’t as malleable as it is with a typical RAW file.

Scene recognition

So much of computational photography is the camera understanding what’s in the frame. One obvious example is when a camera detects that a person is in front of the lens, enabling it to focus on the subject’s face or eyes.

Now, more things are actively recognized in a shot. A smartphone can pick out a blue sky and boost its saturation while keeping a group of trees their natural green instead of letting them drift toward blue. It can recognize snow scenes, sunsets, urban skylines, and so on, making adjustments in those areas of the scene when writing the image to memory.
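Here’s a rough Python sketch of that kind of selective adjustment: boost saturation only where a pixel is flagged as sky, and leave everything else alone. The `looks_like_sky` rule is a crude stand-in I made up for illustration. A real camera relies on a trained recognition model, not a color threshold, and the sample colors and boost factor are assumptions too.

```python
import colorsys

def boost_saturation(rgb, factor):
    """Scale a pixel's saturation, capped at fully saturated."""
    h, l, s = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(h, l, min(1.0, s * factor))

def looks_like_sky(rgb):
    """Hypothetical stand-in for scene recognition: bright, blue-dominant pixels."""
    r, g, b = rgb
    return b > 0.5 and b > r and b > g

def enhance(pixels, factor=1.3):
    """Boost only the pixels flagged as sky; pass the rest through untouched."""
    return [boost_saturation(p, factor) if looks_like_sky(p) else p for p in pixels]

sky = (0.45, 0.65, 0.90)   # pale blue
tree = (0.20, 0.50, 0.15)  # foliage green

result = enhance([sky, tree])
print("sky:", [round(c, 2) for c in result[0]], "tree:", result[1])
```

The sky pixel comes back more saturated, while the tree pixel is returned exactly as it went in.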

Another example is the ability to not just recognize people in a shot, but preserve their skin tones when other areas are being manipulated. The Photographic Styles feature in the iPhone 13 and iPhone 13 Pro lets you choose from a set of looks, such as Vibrant, but it’s smart enough to not make people look as if they’re standing under heat lamps.

Or take a look at Google’s Real Tone technology, a long-overdue way to more accurately render darker skin tones. For decades, color film was processed using only white-skinned reference images, leading to inaccurate representations of darker skin. (I highly recommend the “Shirley Cards” episode of the podcast 99% Invisible for more information.) Google claims that Real Tone more accurately depicts the full range of skin colors.

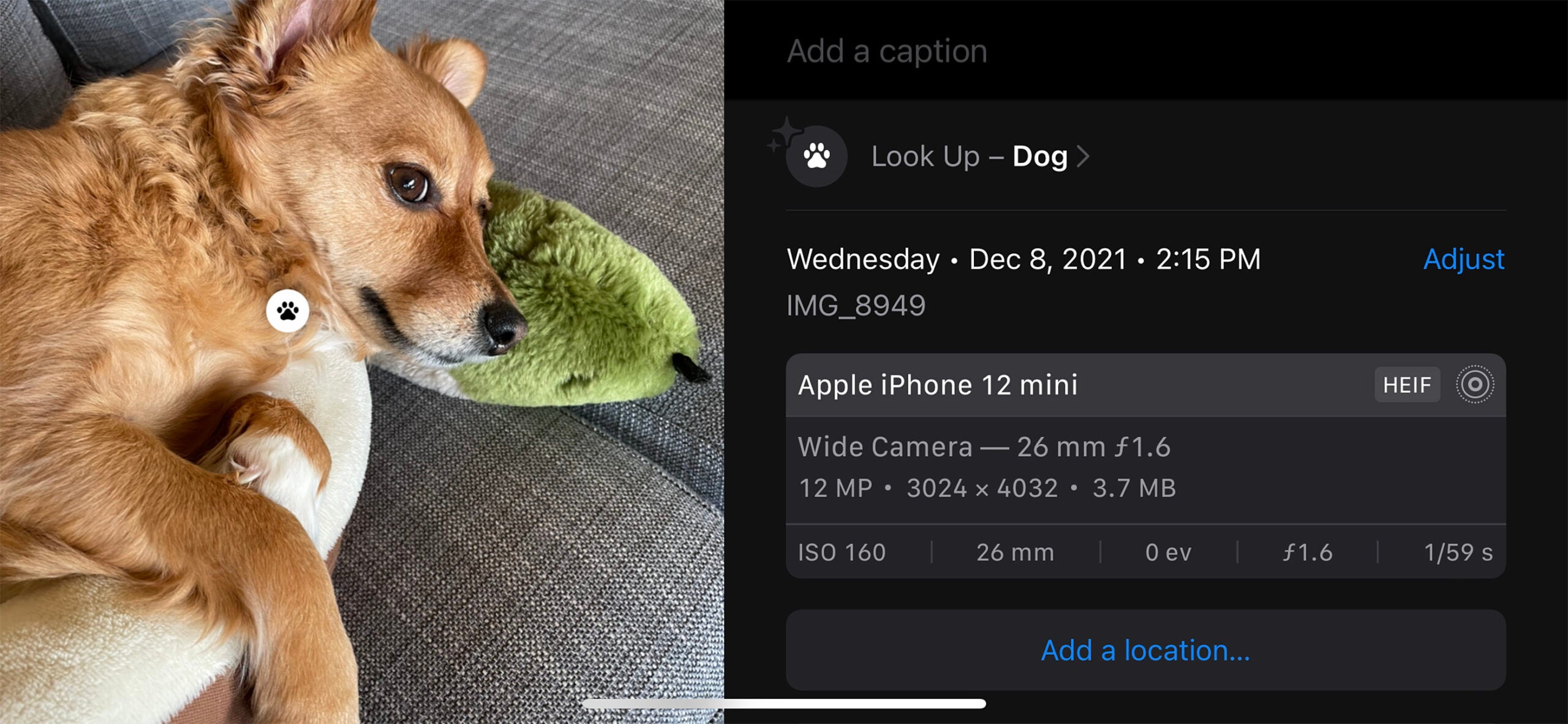

Identifying objects after capture

Time for software to help me mask a shortcoming: I’m terrible at identifying specific trees, flowers, and so many of the things that I photograph. Too often I’ve written captions like, “A bright yellow flower on a field of other flowers of many colors.”

Clearly, I’m not the only one, because image software now helps. When I shoot a picture of nearly any kind of foliage with my iPhone 13 Pro, the Photos app uses machine learning to tell that a type of plant is present. I can then tap to view the possible matches.

This kind of awareness extends to notable geographic locations, dog breeds, bird species, and more. In this sense, the camera (or more accurately, the online database the software is accessing) is smarter than I am, making me seem more knowledgeable—or at least basically competent.

Smarts don’t have to smart

I want to reiterate that all of these features are, for the most part, assisting with the technical aspects of photography. When I’m shooting with my smartphone, I don’t feel like I’ve given up anything. On the contrary, the camera is often making corrections that I would otherwise have to deal with. I get to think more about what’s in the frame instead of how to expose it.

It’s important to note that alternatives are close at hand, too: apps that enable manual controls, shooting in RAW for more editing latitude later, and so on. And hey, you can always pick up a used SLR camera and a few rolls of film and go completely manual.

The post You’re already using computational photography, but that doesn’t mean you’re not a photographer appeared first on Popular Photography.